Introduction

Back in the 1950s, a family’s “home video system” was typically a console television, which had no more than two or three adjustments. In contrast, a modern system commonly includes a Blu-ray Disc player, a receiver, a display, and in some cases a video processor and/or a switcher. Each of these devices may have a dozen or more user-accessible adjustments and mode switches, often with cryptic names that vary from manufacturer to manufacturer.

As a consequence there are a seemingly limitless number of combinations of settings and modes to choose from in most video enthusiasts’ home video systems, all of which affect the picture in some subtle way. Obvious questions arise: Which modes produce the best picture? Are there tradeoffs between the various settings? Is there a systematic way of figuring out which modes to choose?

This article is the first of several that will hopefully help answer those questions. Each one will look at a common setting one would find on a display, player, and/or video processor, and go through the process of evaluating the video image quality in each mode. We’ll also get into some of the theory and technology behind each of the settings, though it’s not really necessary to understand the technology in order to do the evaluation. If you’re uninterested in the video engineering details, you can skip ahead to the “Performing the Evaluation” section below.

This particular article is about how to choose the output color space from your player and/or video processor. We’ll talk a bit about what a “color space” is, why you should care, and how to tell which color space or spaces work best with your combination of equipment.

What Is a “Color Space”?

A “color space” is a way of specifying a color numerically, usually as a triplet of numbers representing positions in a three-dimensional “space” of color. Color spaces are three-dimensional because our eyes have three different kinds of color-sensitive cells (called “cone cells” or “cones”), and thus every color space in one way or another must encode three different color intensities. Most people are at least a bit familiar with the way images are formed on a computer monitor or television by combining red, green, and blue dots of varying brightness to form a wide range of colors. That method uses the most common kind of color space, the “RGB” space, named for the colors Red, Green, and Blue.

As it turns out, there is not just one RGB color space. There are an infinite number of RGB color spaces, created by varying several parameters, including the specific hue of red, green, and/or blue to be used for the colored dots in the display, the hue of white used, and the specific way the brightness of the dots in the display varies as the numbers fed into the display vary.

It’s worth noting at this point that the RGB spaces we use all the time in video and computer displays are generally labeled “R’G’B’” (pronounced “R-prime, G-prime, B-prime”) by color scientists because they are “gamma-corrected” spaces. Color scientists reserve “RGB” to refer to non-gamma-corrected (or “linear”) spaces that use red, green, and blue primaries. This distinction is not as commonly used in the video or computer world, and is beyond the purview of this article. Just know that in this article, when we talk about “RGB” we mean R’G’B’ in the language of color science. We’ll talk about this further in a future article about gamma.

You might well ask, “If the way you specify colors is via the percentages of R, G, and B, and different color spaces use different primary colors of Red, Green, and/or Blue, how do you specify the specific primary colors of Red, Green, or Blue used to define the space?” The answer is that you use a fundamental color space, called XYZ, or more formally CIE XYZ. XYZ is a color space that is derived from basic studies of how the eye and brain sense color. It is notable for being an “absolute” color space (meaning that colors are specified directly, not by reference to other colors), and for being able to represent any possible real visible color that a human being can sense. RGB, by contrast, is a “relative” color space, where the colors are specified relative to three “primaries,” which are the colors of red, green, and blue used in that particular space.

There are additional color spaces that can represent any color, almost all derived from XYZ, including “xyY,” “CIELUV,” and “CIELAB.” But displays always use some form of RGB as their fundamental color space, for the simple reason that real-world displays can’t show all colors. They can only show colors that can be mixed from their specific RGB primaries, so it’s not useful to send them colors they can’t display.

In the world of high definition video, there is one very common RGB space specified in an ITU standard called BT.709, or sometimes Rec. 709 (for “Recommendation number 709”). It specifies (in an absolute space) the specific colors of red, green, and blue that must be used in a conforming display, and what color of white the display needs to produce when all three primaries are at full brightness. There is no current standard for display gamma, which is how the brightness of each pixel varies as the input voltages or digital values vary, but there is a common understanding based on using the gamma of the CRTs used in video mastering.
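To make the idea of specifying primaries “in an absolute space” a little more concrete, here is a minimal Python sketch that converts BT.709 RGB values to XYZ and then to the x and y chromaticity coordinates used by xyY. The matrix is the commonly published one for BT.709 primaries with a D65 white point, rounded to four places; it is not given in this article, and the function names are our own. Note that it assumes linear (non-gamma-corrected) RGB values, not the R’G’B’ values discussed elsewhere in this article.

```python
# Linear-light BT.709 RGB -> CIE XYZ matrix (D65 white), rounded to 4 places.
# These are the commonly published values, not figures quoted from the article.
RGB_TO_XYZ_709 = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def rgb_to_xyz(rgb):
    """Convert a linear (not gamma-corrected) RGB triplet in 0..1 to XYZ."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in RGB_TO_XYZ_709)


def xyz_to_xy(xyz):
    """Project XYZ onto the xy chromaticity plane (the 'xy' of xyY)."""
    total = sum(xyz)
    return (xyz[0] / total, xyz[1] / total)


if __name__ == "__main__":
    samples = [("red", (1, 0, 0)), ("green", (0, 1, 0)),
               ("blue", (0, 0, 1)), ("white", (1, 1, 1))]
    for name, rgb in samples:
        x, y = xyz_to_xy(rgb_to_xyz(rgb))
        print(f"{name}: x={x:.3f} y={y:.3f}")
```

Running it should print chromaticities close to the published BT.709 primaries and white point, which is a handy sanity check that the matrix and the primaries describe the same color space.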

Video and Y’CbCr

Given that video displays are fundamentally RGB devices and all share a common RGB color space, specified in BT.709, you’d expect that the primary color space used to transmit and store video would be BT.709 RGB. But in fact, even though video cameras physically measure RGB values, and displays are made using RGB primaries, video is stored, transmitted, and processed in a color space called Y’CbCr, or sometimes informally “YUV.”

Y’CbCr is the latest version of a set of color spaces that were developed in the early days of color television. The broadcasters and the FCC wanted to make color television backward compatible with black-and-white television, so all the people who owned black-and-white televisions wouldn’t find them obsolete when color broadcasting started. Unfortunately, there wasn’t enough room to broadcast both a full-color signal and a black-and-white signal within the frequency band owned by a single television station. It was necessary to find a way to send both a compatible black-and-white signal and a color add-on signal that could be combined with the black-and-white signal to produce a full color signal.

Since there was very little room in the frequency band for even a color add-on signal, it was necessary to make the color add-on very low resolution. This worked out OK because your eyes are much less sensitive to color resolution than to brightness resolution. Another way of looking at it is that the viewer’s perception of how sharp the picture is depends mostly on the main black-and-white signal, with the extra color signal adding almost no additional sharpness. Thus the color signal can be, in effect, a somewhat rough and blurry overlay.

The main black-and-white signal is carried in a single channel called Y’ (pronounced Y-prime), and the low-resolution color signal is carried in two channels, labeled Cb and Cr, also called “color difference” signals, because they are derived from B-Y’ and R-Y’. Y’ itself is a weighted combination of R, G, and B, using specific weights that are designed to make Y’ approximate perceived brightness.

Y’CbCr is a handy color space for storing and broadcasting video, because the Y’ signal can be stored or sent at very high resolution, and Cb and Cr can be stored or sent at low resolution without causing the final image to look significantly worse. In effect it’s a very simple lossy compression scheme, throwing away portions of the image that are less important for perception (the detailed color information) in order to devote more resources to the important stuff (the black-and-white details).

As with RGB, there are a potentially infinite number of possible Y’CbCr color spaces, varying primarily in the weights of R, G, and B that are combined to form the Y’ signal. Luckily, there is a standard for high-definition video, the aforementioned BT.709, which gives specific mathematical functions for converting RGB to and from Y’CbCr. (Standard definition video uses a different standard, BT.601, but it’s becoming less and less relevant as more content is being produced in HD or upconverted to HD.)
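For readers who like to see the arithmetic, here is a rough Python sketch of the BT.709 conversion in normalized form. The luma weights (0.2126 for red, 0.0722 for blue, and the remainder for green) are the published BT.709 values; the function names and the normalized ranges (R’G’B’ and Y’ in 0 to 1, Cb and Cr in -0.5 to 0.5) are our own simplifications. Real video adds an offset and scales to integer code values, which this sketch leaves out.

```python
# BT.709 luma weights for red and blue; green gets whatever is left over.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def rgb_to_ycbcr(r, g, b):
    """Convert gamma-corrected R'G'B' (each 0..1) to normalized Y'CbCr."""
    y = KR * r + KG * g + KB * b      # weighted sum approximates brightness
    cb = (b - y) / (2 * (1 - KB))     # scaled B' - Y' color difference
    cr = (r - y) / (2 * (1 - KR))     # scaled R' - Y' color difference
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Invert rgb_to_ycbcr: recover R' and B' first, then solve for G'."""
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


if __name__ == "__main__":
    print(rgb_to_ycbcr(1.0, 1.0, 1.0))   # white: full luma, no color difference
    print(rgb_to_ycbcr(0.0, 0.0, 1.0))   # pure blue: low luma, Cb at its maximum
```

Swapping in the BT.601 weights for standard definition would only change the two constants at the top, which is why the two standards are easy to confuse and why a mismatch shows up as slightly wrong colors rather than an obviously broken picture.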

Subsampling

In the old days of color TV, the Cb and Cr channels (which, to be precise, went by other names at the time) were reduced in resolution via an analog lowpass filter, which stripped out detail and allowed the color signal to fit in the tiny amount of broadcast bandwidth available for the extra color information. But in the digital era, the Cb and Cr signals are reduced in resolution via the simple expedient of scaling them down to a smaller number of pixels.

The process of scaling the color portions of an image to a lower resolution is called “subsampling” or “downsampling,” and scaling the color back to the original resolution is called “upsampling.” Either one can also be called “resampling.” All of these operations are identical in practice to scaling the color channels, just like scaling an image from one pixel size to another. There are a variety of different resolutions that can be stored or sent, and we often think of these various color resolution options as different color spaces. This isn’t strictly true, as technically speaking the color space remains the same no matter how the color channels are scaled, but it’s still relatively common to speak of changing color spaces when one is actually changing color subsampling modes.

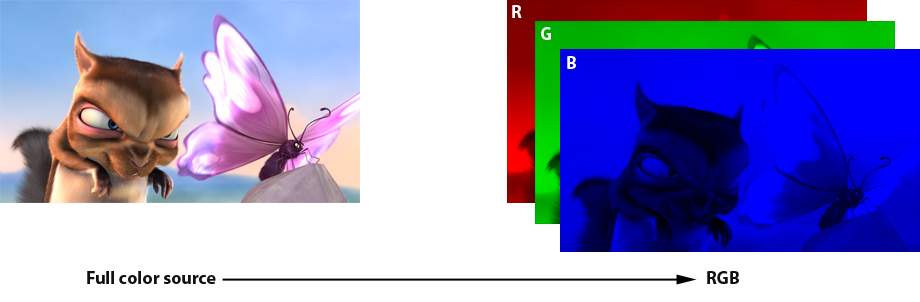

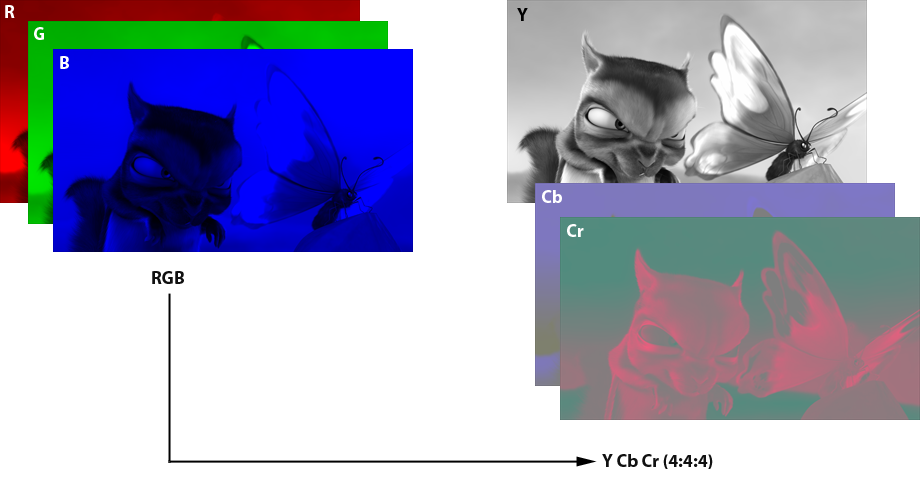

Original to RGB

A full color image can be thought of as three separate color channels: Red, Green, and Blue. This is the basic way images are displayed on any current digital monitor or television.

RGB to 4:4:4

The color space called 4:4:4 is a Y’CbCr color space where the Cb and Cr channels are not downsampled at all, but are stored at full resolution. The “4:4:4” nomenclature is based on a way of thinking about chroma subsampling from the old days of analog video, where sampling was potentially different for the odd scan lines and the even scan lines. “4:4:4” means that for every 4 Y’ pixels, there are 4 Cb and Cr pixels on the even scan lines and 4 Cb and Cr pixels on the odd scan lines. This format is expensive to store and transmit, so is only used for storage of very high-end professional master video. But it is often available as an output format from a player or video processor. Keep in mind that the player or video processor does not have access to the original 4:4:4 channels, but must scale up the lower-resolution 4:2:0 channels (see below).

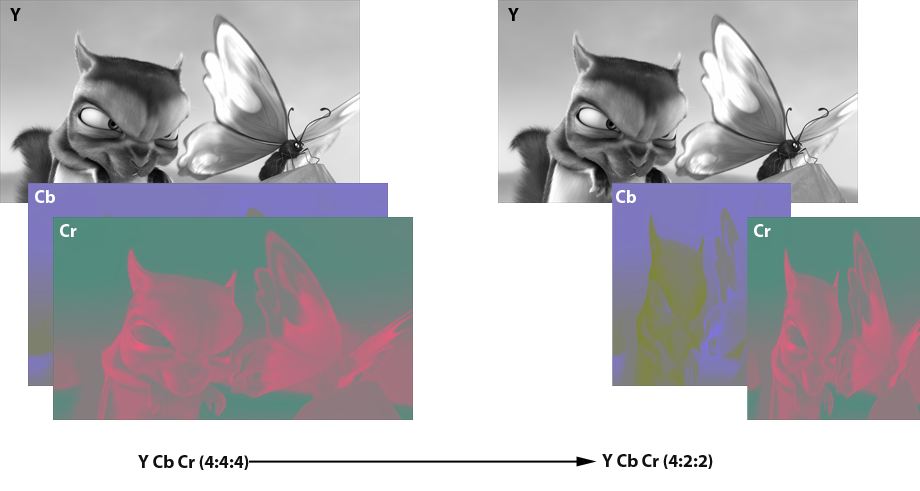

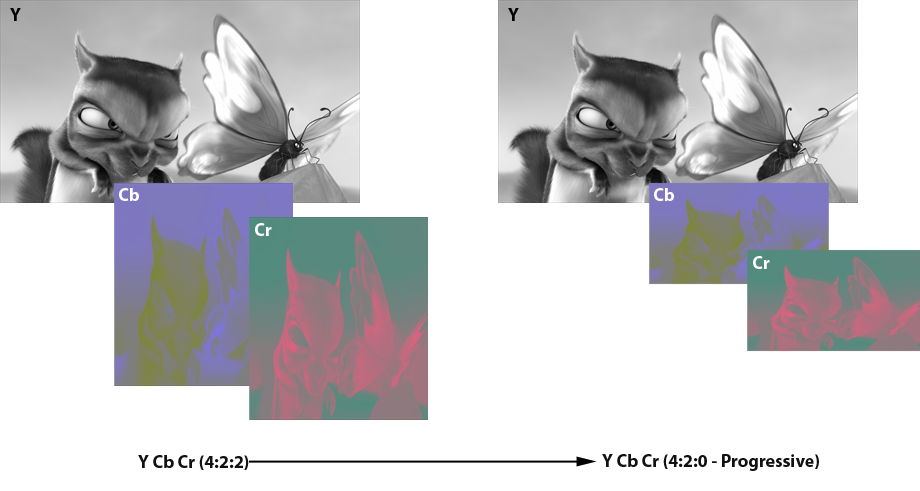

4:4:4 to 4:2:2

For professional video, the most common format is 4:2:2, which means for every 4 Y’ pixels, there are 2 Cb and Cr pixels on the even lines and 2 Cb and Cr pixels on the odd lines. In practice, this works out to scaling the Cb and Cr by ½ in just the horizontal direction, but leaving the vertical unchanged. So each 1920×1080 image in 4:2:2 has the Cb and Cr stored at 960×1080. To display an image stored in this format, the Cb and Cr channels only need to be scaled horizontally.
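As an illustration of the horizontal-only scaling described above, here is a small Python sketch that halves the width of a single chroma plane by averaging each horizontal pair of samples. The function name is ours, and simple pair averaging is just one possible filter chosen for clarity; real encoders may use different filters and sample positions.

```python
def subsample_422(plane):
    """Halve the horizontal resolution of one chroma plane.

    `plane` is a list of rows, each a list of numbers with an even length.
    Each output sample is the average of two horizontal neighbors; the
    number of rows is left unchanged, as in 4:2:2.
    """
    return [
        [(row[x] + row[x + 1]) / 2 for x in range(0, len(row), 2)]
        for row in plane
    ]


if __name__ == "__main__":
    cb = [
        [10, 20, 30, 40],
        [0, 0, 100, 100],
    ]
    half = subsample_422(cb)
    print(half)                        # two rows, each half as wide
    print(len(half), len(half[0]))     # row count unchanged, width halved
```

Applied to a 1920×1080 Cb or Cr plane, the same function would return a plane that is 960 samples wide and still 1080 rows tall.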

4:2:2 to 4:2:0 – Progressive

The subsampling format that is used on modern consumer video delivery media like Blu-ray Disc and DVD is called 4:2:0. This confusing designation means that for every 4 Y’ pixels on the even scan lines, there will be 2 Cb and Cr pixels and for every 4 Y’ pixels on the odd scan lines there will be 0 Cb and Cr pixels. In practice, it really means that the Cb and Cr portions of the image are scaled by ½ in both dimensions. So if the resolution of the overall image is 1920×1080, for example, the Cb and Cr portions of the image will be at 960×540 resolution. In order to display an HD image that is stored in this format, the Cb and Cr channels need to be scaled back up to 1920×1080 by interpolating values for the missing pixels, and then each pixel can be converted to RGB using the Y’CbCr->RGB algorithm specified in BT.709.
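The plane sizes quoted for the three subsampling modes follow from two divisors per mode, one horizontal and one vertical. The sketch below (with names of our own choosing) computes the Cb and Cr plane size for each mode and the total number of samples per frame, which gives a feel for how much data each format saves.

```python
# (horizontal divisor, vertical divisor) applied to the Cb and Cr planes.
CHROMA_DIVISORS = {
    "4:4:4": (1, 1),   # chroma at full resolution
    "4:2:2": (2, 1),   # chroma halved horizontally only
    "4:2:0": (2, 2),   # chroma halved in both directions
}


def chroma_plane_size(width, height, mode):
    """Return (width, height) of each chroma plane for a given luma size."""
    h_div, v_div = CHROMA_DIVISORS[mode]
    return (width // h_div, height // v_div)


def samples_per_frame(width, height, mode):
    """Total samples in one frame: one Y' plane plus the Cb and Cr planes."""
    cw, ch = chroma_plane_size(width, height, mode)
    return width * height + 2 * cw * ch


if __name__ == "__main__":
    for mode in CHROMA_DIVISORS:
        size = chroma_plane_size(1920, 1080, mode)
        total = samples_per_frame(1920, 1080, mode)
        print(f"{mode}: chroma {size[0]}x{size[1]}, {total} samples per frame")
```

For a 1920×1080 frame, 4:2:0 works out to exactly half the samples of 4:4:4, and 4:2:2 to two thirds.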

Given that all the various shiny-disc and broadcast video formats use 4:2:0 natively, one might assume that players would just send the video to the display in that format. But as it turns out, video players are basically required to at minimum convert the video to 4:2:2 in order to send it to the display, because there are standards for storing 4:2:0, but no standards for sending it to a display. While only 4:2:2 is required, many players now also offer the ability to go further and convert the video to 4:4:4, or even RGB.

And now we get to the meat of this guide. What format should you set your player to output? If you have a video processor, what format should you feed it, and what format should you have it produce? Or does it even matter?

The answer, as with so many other things in life, is, “It depends.”

The Conversion Chain

Let’s consider the process necessary to get video off a shiny disc (or from a digital broadcast or cable channel). First the video needs to be converted from 4:2:0 to 4:2:2, then to 4:4:4, then to RGB, and finally it can be fed to the display controller. This is the same process no matter what display technology is being used, whether LCD, DLP, plasma, or CRT. It’s possible to shortcut the process slightly by going directly from 4:2:0 to 4:4:4, but in practice this isn’t used as often as you’d think.

If you choose to output 4:2:2 from your player to the display, then the display will need to do the scaling to 4:4:4 and then to RGB. If you output 4:4:4 to the display, the display will not need to do any scaling at all, but will need to do the conversion to RGB. If you output RGB to the display, then the display can avoid all conversion steps and send the signal right to the controller. No matter which you choose, the same conversion steps are still happening; all you are choosing is which device is performing the conversion.
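One way to keep this straight is to write the chain down as a list and split it at the format the player is set to output. The following Python sketch does just that; the names are ours, and it models only the full four-stage chain, not the shortcut of going directly from 4:2:0 to 4:4:4.

```python
# The conversion chain from disc to display controller, in order.
CHAIN = ["4:2:0", "4:2:2", "4:4:4", "RGB"]


def split_conversions(player_output):
    """Return which device performs each conversion step.

    Everything up to `player_output` happens in the player; everything
    after it happens in the display.
    """
    if player_output == "4:2:0":
        # The disc format itself is not something a player can send on.
        raise ValueError("4:2:0 cannot be sent to the display")
    idx = CHAIN.index(player_output)
    steps = [f"{a} -> {b}" for a, b in zip(CHAIN, CHAIN[1:])]
    return {"player": steps[:idx], "display": steps[idx:]}


if __name__ == "__main__":
    for fmt in ("4:2:2", "4:4:4", "RGB"):
        print(fmt, split_conversions(fmt))
```

Whatever output format is chosen, the two lists together always contain the same three steps; only the dividing line moves.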

There’s no specific reason that a display or a player would be the optimal place to do these conversion steps. In theory doing the conversion in the display minimizes the amount of data that has to flow across the HDMI link, but in practice HDMI is more than adequate to handle any format all the way up to 4:4:4 or RGB.

So the key to choosing the right color space to output is finding out which device does a better job of converting color spaces. This is not always easy to evaluate, and it’s quite possible for one device to do a better job in one area, like 4:2:2 to 4:4:4, but do worse in another area, like 4:4:4 to RGB.

You’d think that if a display handles a 4:2:2 input signal well, then feeding it an RGB signal would be no worse, but in fact some displays do extra work when they are fed RGB, because they convert the signal back to 4:4:4 or even 4:2:2! This happens because one or more of their internal processing chips is designed only for one color format. So for these displays, sending any format other than the one they use for internal processing will only add extra processing and potentially degrade the image.

The same logic applies to video processors. If the processor does all its work in 4:2:2, there’s no advantage to sending it RGB or 4:4:4, and in fact there may be a disadvantage.

Unfortunately device makers tend not to reveal the exact processing steps they use internally, or the algorithms they use to convert various color spaces to RGB. Some use different algorithms depending on which color space is fed in. The bottom line is to assume nothing, and test every combination.

Performing The Evaluation

Here’s how you can decide which color space mode works best with your display and player combination, using the Spears & Munsil High Definition Benchmark. If you change any component in your system, either player, display, or processor, you’ll want to run the evaluation again. There’s no easy way to predict what will produce the best results with a particular combination of components.

Scoring Form

We’ve helpfully provided a PDF file you can download and print out, so you can try all the output modes from your player and evaluate which one works best.

Before you start, you’ll want to check that the settings for brightness, contrast, color, tint, and sharpness are all calibrated properly for each of the color space modes. Some displays have separate memories for every input mode, so you might find that even if the display is adjusted properly when it is being fed 4:2:2, the settings change when it gets a 4:4:4 or RGB input signal.

Start by setting the output on the player to 4:2:2. Run through the basic calibration steps for brightness, contrast, color, and tint (using the articles on the Spears & Munsil web site as a guide). Then switch the output on the player to 4:4:4 and run through the calibration again. You may not need to adjust anything. If your player has RGB mode, do the calibration again for that mode. If you have even more modes, you may need to print out more forms and write in the names of the other modes you want to compare.

For the color temperature and/or gamma adjustments, unless you have special test equipment you won’t be able to calibrate these settings. Just make sure that these settings are set the same for all the color space modes. If your display only has one set of settings that is correct for all of the color space modes (which is the most common case), you don’t need to fill in this section. Just do the calibration once and then make sure it continues to work in the other picture modes.

Once you are sure that you have the correct settings for each input picture mode, run through the various tests, putting a check in the box for pass and leaving the box unchecked for fail. When you’re done, hopefully one mode will have the most boxes checked, and most of the time that will be the preferred mode to use. In some cases, you may find that one specific issue is more distracting for you than the others, and in that case you’ll want to choose among the modes that don’t have that particular problem.
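If you would rather tally the form on a computer than on paper, the logic is simple enough to sketch in a few lines of Python. Everything here is illustrative: the function name is ours and the pass/fail values are made up for the example, not measurements of any real equipment.

```python
def best_modes(results, must_pass=()):
    """Pick the output mode(s) with the most checked boxes.

    `results` maps a mode name to a dict of {row label: passed?}.
    `must_pass` lists rows you personally can't live without; any mode
    failing one of them is dropped before counting. Ties are all returned.
    """
    eligible = {
        mode: rows for mode, rows in results.items()
        if all(rows.get(row, False) for row in must_pass)
    }
    if not eligible:
        return []
    scores = {mode: sum(rows.values()) for mode, rows in eligible.items()}
    top = max(scores.values())
    return sorted(mode for mode, score in scores.items() if score == top)


if __name__ == "__main__":
    # Hypothetical scores for three output modes on three rows of the form.
    form = {
        "4:2:2": {"Alignment correct": True, "Diagonals smooth": False,
                  "White not clipped": True},
        "4:4:4": {"Alignment correct": True, "Diagonals smooth": True,
                  "White not clipped": False},
        "RGB":   {"Alignment correct": False, "Diagonals smooth": True,
                  "White not clipped": False},
    }
    print(best_modes(form))
    print(best_modes(form, must_pass=("White not clipped",)))
```

The `must_pass` argument covers the case where one issue bothers you more than the rest: modes with that problem are ruled out first, and the count decides among what is left.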

If you can select modes in both your player and your video processor, our recommendation is to start by trying the various modes in your processor, leaving the player in factory default mode. Choose the output mode that scores best and set the processor in that mode, then move to the player and evaluate all the various modes the player can produce. If you end up changing the player’s output mode, you may want to return to the processor to re-evaluate in case the input mode affects the processor’s output. If you want to be completely comprehensive, you may want to try every possible combination of player and processor mode separately.

Player -> Display

Player -> Receiver -> Display

Important note: If you are running the HDMI signal through a receiver or switcher and find problems, especially with clipping, you should try taking the receiver or switcher out of the chain and connecting the player directly to the display to see if that fixes the problem. There are several receivers, switchers, and video processors that will clip the signal passing through them, even if they aren’t doing any processing of the image. Also check the web site of the manufacturer of your receiver or switcher to see if there is a firmware update, as this might correct some or all of the errors.

Let’s take a look at the various test patterns you’ll want to look at and what to look for in each:

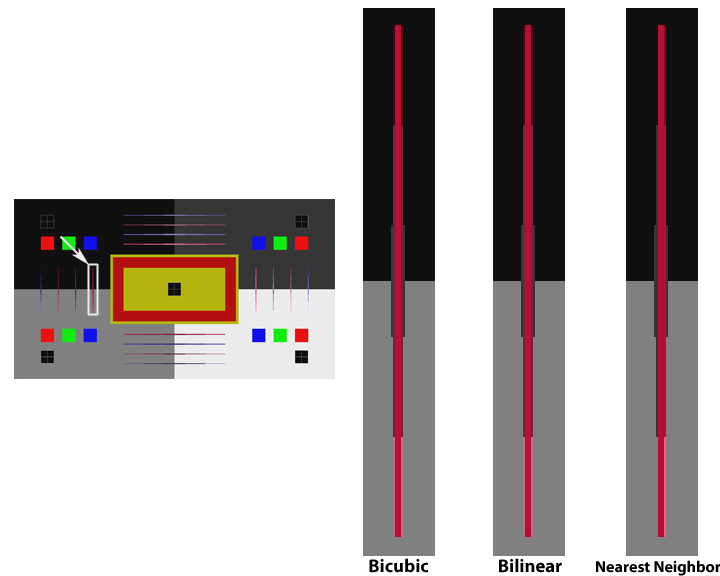

Chroma Alignment

This pattern contains shapes in various color combinations that are designed to show any misalignment between the chroma channels and the luma channel. These misalignments can be caused by mistakes or shortcuts in the chroma upsampling, and it’s not uncommon to find that changing the format sent from the player to the display changes the amount of chroma misalignment.

The primary things to look at are the long thin diamond shapes on the left, right, top, and bottom of the screen. Each of them has a single straight line of chroma pixels laid on top of a long skinny diamond in the luma channel. When the alignment is correct, the chroma should be centered on the diamond, and the diamond should look completely symmetrical. Most people find it easiest to see the alignment clearly against the gray background. The difference can be quite subtle, on the order of a half-pixel shift.

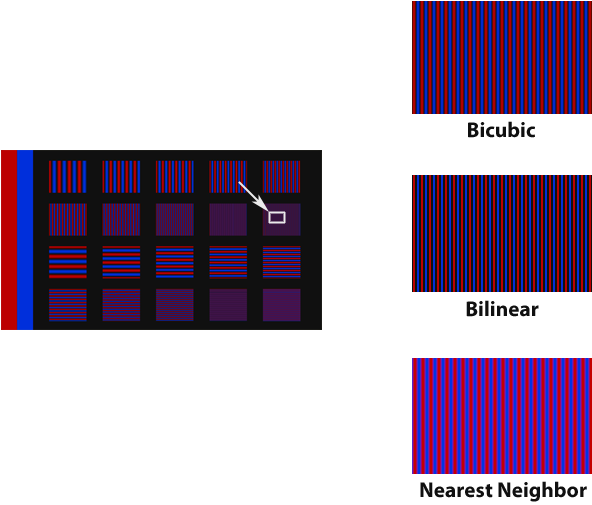

You can also often see differences (if any) in the chroma upsampling algorithm. Nearest neighbor will have very sharp chroma transitions, but will have a half-pixel shift to the right in the chroma channel. Bilinear and bicubic will produce a softer, but more accurate, chroma channel, with smoothly rolled-off edges. Don’t be fooled by the sharp look of nearest neighbor; on this pattern it often looks sharper, but it will make the finished image look jagged. See the example image to see what the various upsampling approaches tend to look like.

Put a check in the row labeled “Alignment correct” for any mode where the chroma lines are centered in their diamonds. If multiple modes have properly aligned chroma, put a check for all of them. If none of them are properly aligned, put a check for the mode that is the closest to correct, or for none of them if none of them are close to correct.

Also compare the image on screen to our example images for the various chroma upsampling approaches. If you’re not sure what kind of upsampling is being used, you may want to look at the bursts and zone plate patterns and compare them to our samples as well. If you don’t see clear stairstepping in any of those patterns, it’s reasonable to assume that the upsampling is using bilinear or better.

Chroma Alignment

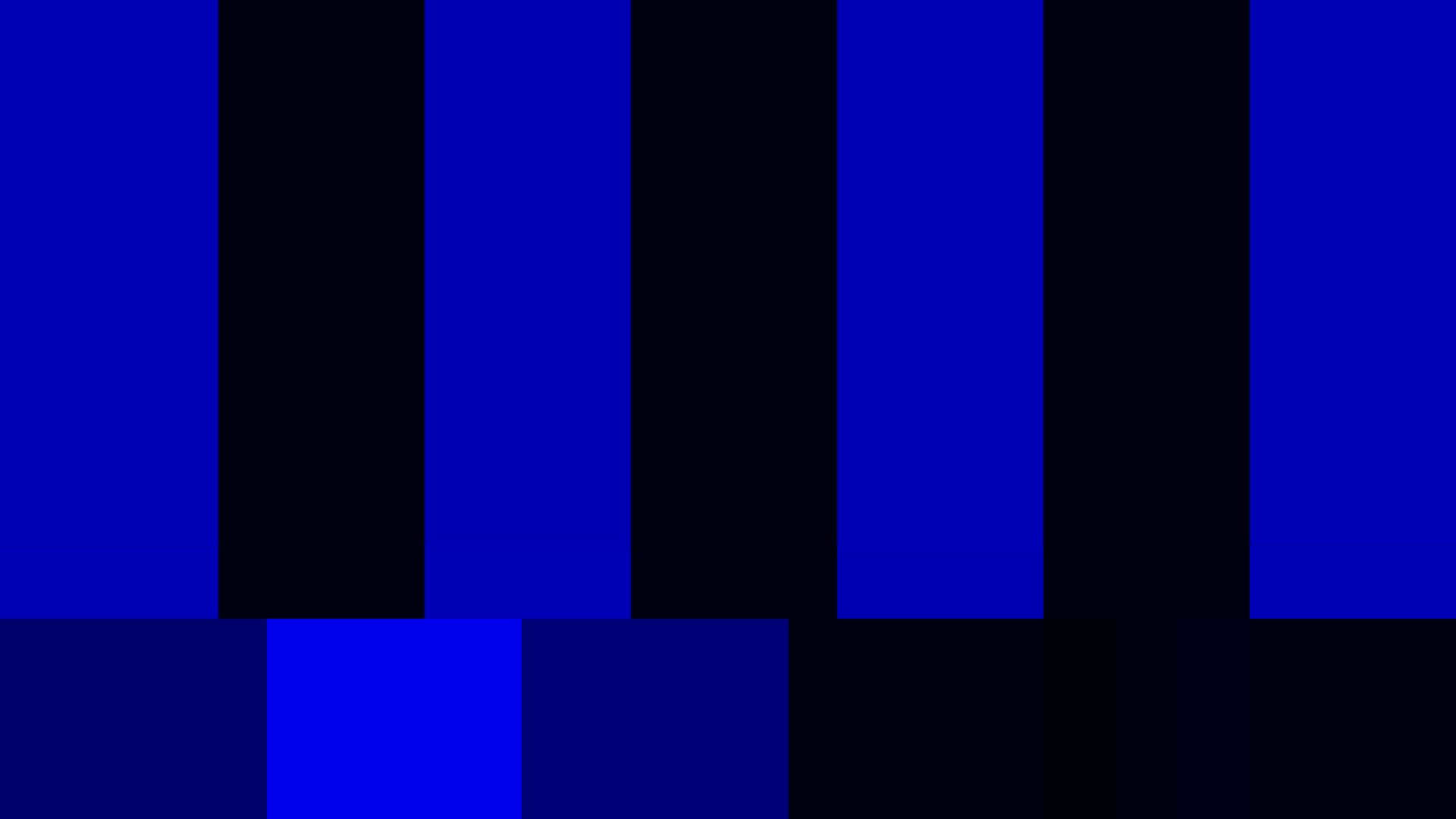

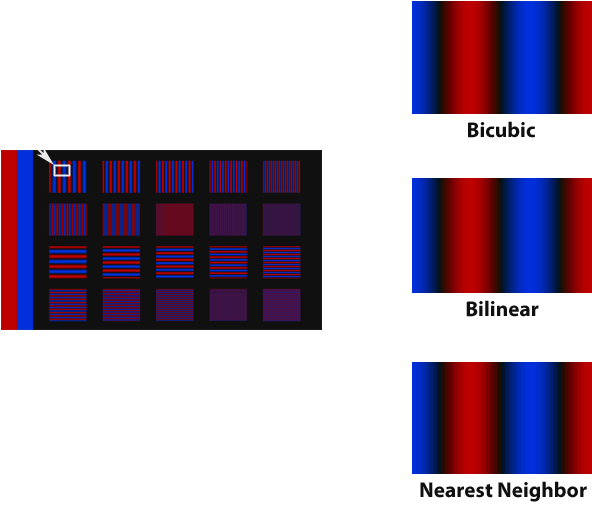

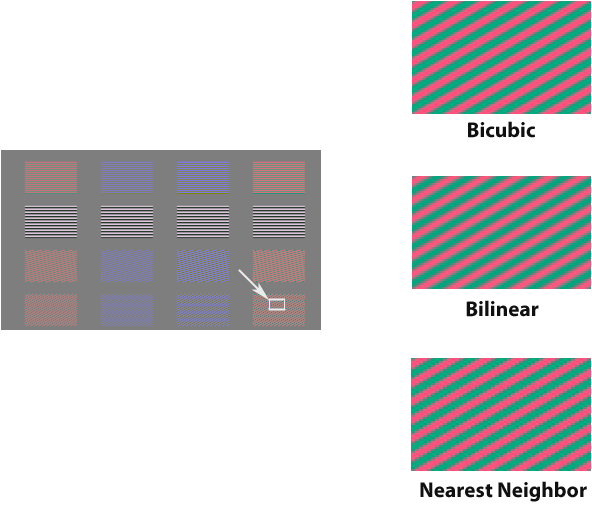

Chroma Multiburst

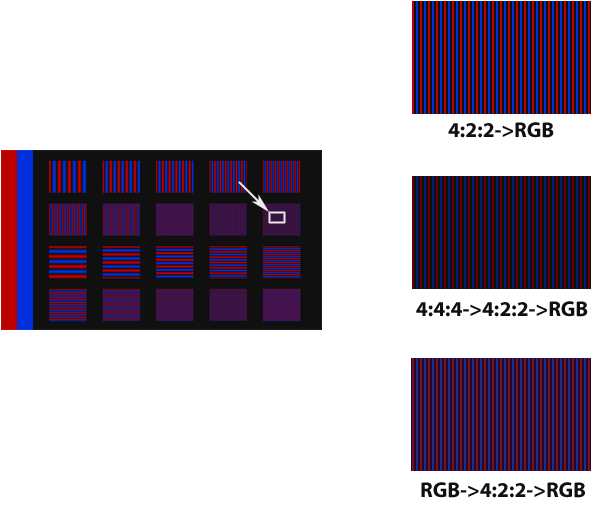

This pattern has ten horizontal bursts in two rows of five on top, and ten vertical bursts in two rows of five on the bottom. The horizontal bursts show how well the video playback chain is reproducing horizontal chroma resolution, and the vertical bursts show how well the video playback chain is reproducing vertical chroma resolution.

For this pattern, look at the highest-frequency bursts, which are on the lower right of both the horizontal and vertical sections. They should have clear, bright colors that look identical to the colors in the other bursts. If the colors are muted, or the burst looks solid gray or any other color, it shows that chroma resolution is being lost during one of the upsampling conversions. If the horizontal burst is muted, that shows a problem in the 4:2:2->4:4:4 conversion. If the vertical burst is muted, that shows a problem in the 4:2:0->4:2:2 conversion.

Another thing that’s fairly easy to tell from this pattern is the quality of the chroma upsampling being done. If the chroma upsampling is being done using an algorithm called “nearest neighbor” then each chroma pixel is just being copied four times to make the new upscaled chroma image. This is fast and easy, but produces blocky, jagged color contours in the final image. Bilinear upsampling uses a linear interpolation algorithm to create the replacement pixels when it scales up the chroma channel, and looks much better. Bicubic upsampling uses two cubic interpolation curves to produce a very smooth and clean chroma channel, and is generally considered the best commonly used algorithm. Take a look at our sample image to get an idea of how this pattern will vary when upsampled with different algorithms.
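To see the difference between the first two algorithms in numbers, here is a simplified one-dimensional Python sketch that doubles the length of a single row of chroma samples. The function names are ours, and the bilinear version assumes each original sample sits midway between the two new samples it covers, with the edges clamped; real video can position chroma samples differently, so treat this as an illustration rather than what any particular player does.

```python
import math


def upsample_nearest(row):
    """Double the row length by copying each sample twice."""
    out = []
    for value in row:
        out.extend([value, value])
    return out


def upsample_bilinear(row):
    """Double the row length by linear interpolation between neighbors.

    Assumes centered sample positions; positions past either end reuse
    the edge sample.
    """
    n = len(row)
    out = []
    for i in range(2 * n):
        pos = (i + 0.5) / 2 - 0.5          # position in source coordinates
        left = math.floor(pos)
        frac = pos - left
        a = row[min(max(left, 0), n - 1)]
        b = row[min(max(left + 1, 0), n - 1)]
        out.append(a * (1 - frac) + b * frac)
    return out


if __name__ == "__main__":
    edge = [0, 0, 100, 100]                # a sharp chroma transition
    print(upsample_nearest(edge))          # abrupt step, no in-between values
    print(upsample_bilinear(edge))         # step is spread over two samples
```

The nearest-neighbor result keeps the hard step, which in two dimensions turns into blocky stairsteps on diagonals, while the bilinear result ramps through intermediate values.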

Put a check in the row labeled “High-frequency detail” for all modes that have clean, bright, colorful high-resolution chroma bursts. If no modes have good bursts, put a check for the mode that has the best-looking ones.

Put a check in the row labeled “Upsampling bilinear or Better” if the upsampling is clearly something better than Nearest Neighbor.

Chroma Multiburst – Low Frequency Burst

Chroma Multiburst – High Frequency Burst

Chroma Multiburst – Multiple Conversions

Chroma Zone Plate

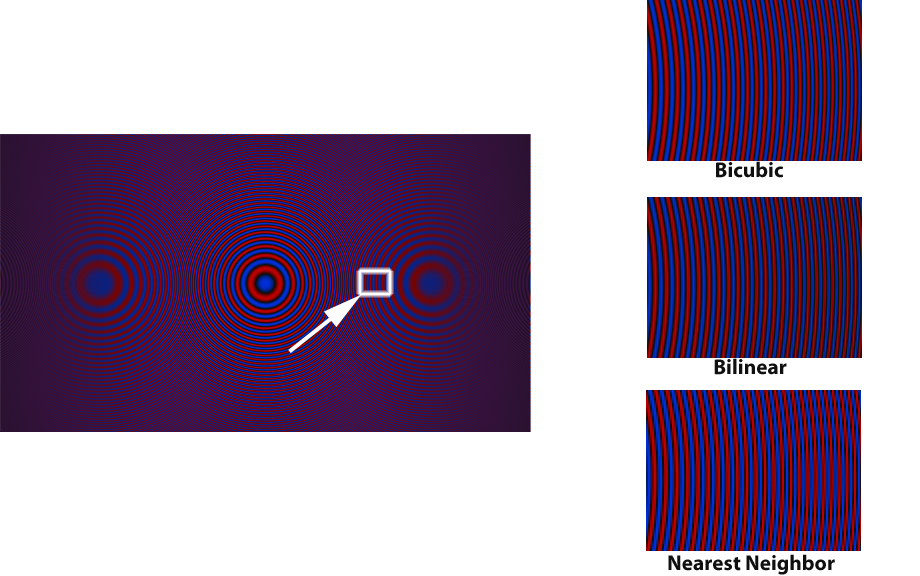

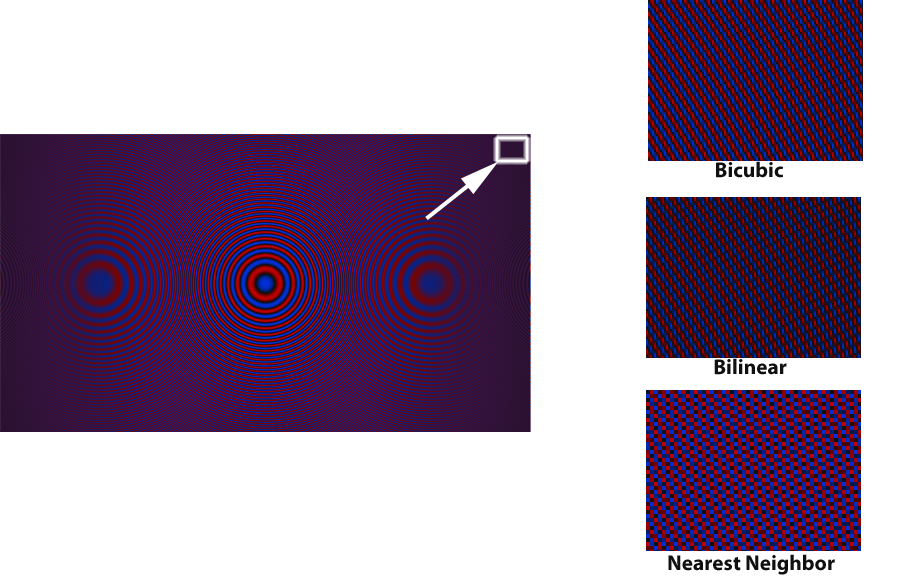

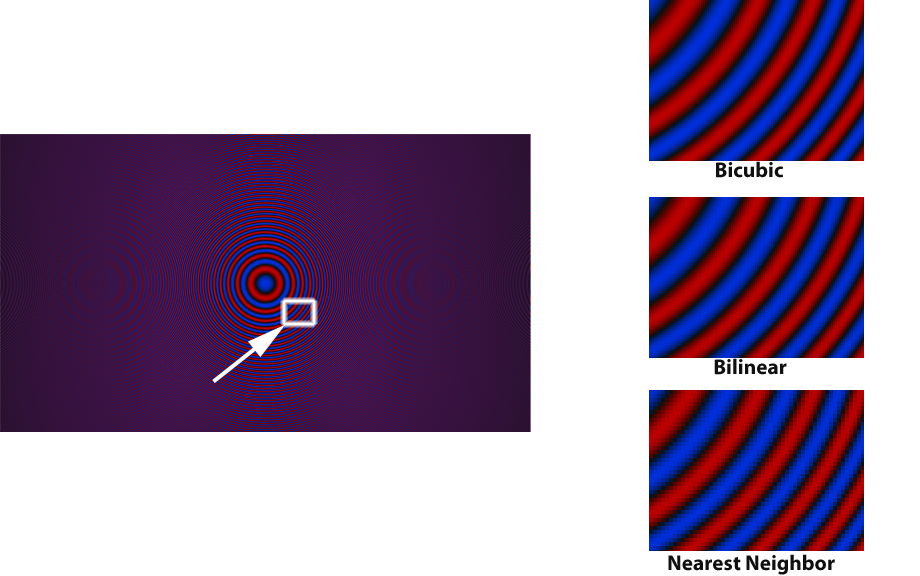

This pattern is a good “at a glance” pattern to see problems and issues with the chroma channels. It has diagonals and high-frequency details that make it possible to see quickly if there are problems with the chroma, once you know what the pattern should look like.

This pattern should have clean, clear colors all the way to the edge of the image, with no obvious loss of color intensity in the corners. If the corners are not as colorful as the center, or look like a solid color, that shows loss of the highest chroma resolution.

The other thing to look at is the amount and strength of the moiré in the pattern. Moiré is a visual impression of false curves caused by aliasing of the true curves in the image. It looks like an optical illusion of sorts, showing concentric circles on the left and right sides of the screen, similar to the actual concentric circles radiating out from the center. There is always a bit of moiré in this pattern even when everything is perfect.

After viewing this pattern with all of the different output modes selected sequentially, put a check in the row labeled “Minimal moiré” for the mode that has the smallest amount of moiré. If all of them have the same amount of moiré, put a check in all of them.

Put a check in the row labeled “Corner detail” for all of the modes that show the corners of the image at full intensity with no falloff in brightness or colorfulness.

Chroma Zone Plate – Minimal Moiré

Chroma Zone Plate – Corner Detail

Chroma Zone Plate


Chroma Upsampling Error

This pattern was designed to test for the Chroma Upsampling Error in MPEG decoders, but it’s also useful for checking the smoothness of chroma upsampling. It has diagonal chroma bursts in the bottom two rows that make it easy to see any jaggedness or stairstepping in the chroma channel. As you choose different output modes from the player, if there is a big difference between the quality of the upsampling algorithms, you’ll see the diagonals vary between smooth and jagged. The best quality upsampling will generally produce the smoothest diagonals on these lines.

After viewing this pattern with all of the different output modes selected sequentially, put a check in the row labeled “Diagonals smooth” for the mode that has the smoothest-looking diagonal lines. If they all look the same, put a check in all the boxes. You might also want to look at the diagonals and curves in the Chroma Zone Plate pattern as well. Sometimes it’s easier to see the differences on one or the other depending on the specific display.

Chroma Upsampling Error

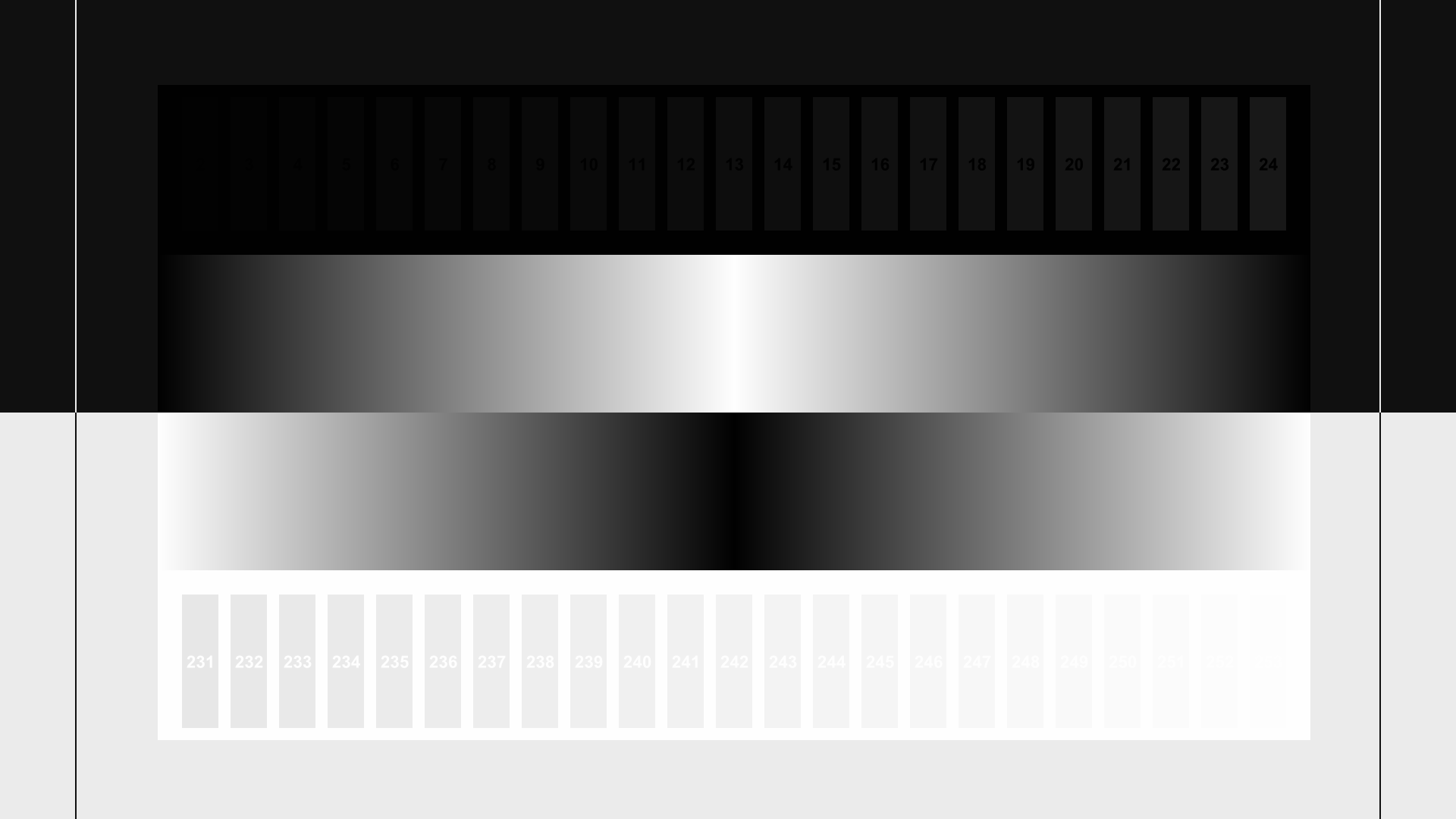

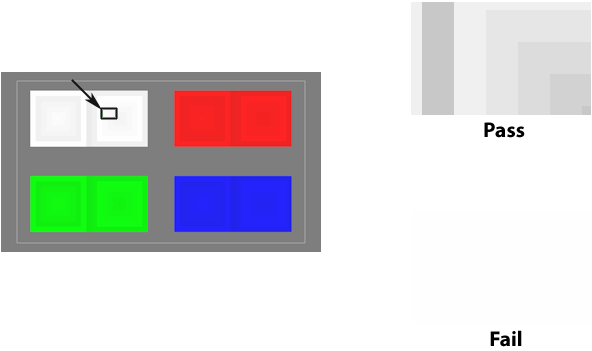

Clipping

This pattern tells you if any of the primary color channels is being clipped above the reference white level at any point in the chain. There are some popular HDMI transmitter chips that clip the Y’ channel when converting 4:2:2 to 4:4:4 or RGB, so when using a player with one of these chips, setting the player to output anything other than 4:2:2 produces a hard clip in the Y’ channel. A telltale sign of this is that the Y’ (white) channel is clipped, but the red, green, and blue channels are not clipped, or at least not completely clipped.
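Here is a small Python sketch of what a hard clip does to the levels above reference white. It assumes 8-bit video-range coding, in which reference white for Y’ is code 235 and codes above it carry the brighter-than-white detail; that number is the standard value, not one quoted in this article, and the function names are ours.

```python
REFERENCE_WHITE = 235   # 8-bit video-range Y' code for reference white


def hard_clip(codes, ceiling=REFERENCE_WHITE):
    """Simulate a chip that limits every sample to reference white."""
    return [min(code, ceiling) for code in codes]


def is_clipped(codes_in, codes_out):
    """True if several distinct above-white input levels came out identical."""
    levels_in = {i for i in codes_in if i > REFERENCE_WHITE}
    levels_out = {o for i, o in zip(codes_in, codes_out) if i > REFERENCE_WHITE}
    return len(levels_in) > 1 and len(levels_out) == 1


if __name__ == "__main__":
    ramp = list(range(230, 255))            # steps from below white to above
    print(is_clipped(ramp, ramp))           # untouched signal
    print(is_clipped(ramp, hard_clip(ramp)))  # signal after a hard clip
```

On screen, the distinct above-white levels are what draw the concentric squares; once they all collapse to the same value, the squares merge into one solid block.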

Put a check in the row labeled, “White not clipped” if the white portion of the pattern shows concentric squares rather than a large solid square.

Put a check in the row labeled, “Red, green, and blue not clipped” if the red, green, and blue portions of the pattern show concentric squares rather than a large solid square.

Clipping